An Architecture for Provisioning In-Network Computing Enabled Slices for Holographic Applications in Next Generation Networks

Introduction

The growing popularity of immersive, real-time holographic applications (HAs) can be attributed to their spatial awareness, proximity, and sense of presence, which provide a more engaging and interactive user experience than traditional multimedia. Delivering HAs requires interconnected holographic components distributed across the network to reach end users. Each component executes a specific holographic function, such as encoding, transcoding, decoding, or rendering. However, provisioning HAs is challenging because current network infrastructure struggles to meet their demanding requirements, including high bandwidth and ultra-low latency. In this context, In-Network Computing (INC) has gained significant attention as a way to enhance the capabilities of existing network infrastructure. By harnessing programmable devices such as routers, switches, and other data-handling devices capable of running software, INC enables computing tasks to be executed within the network. This approach brings application-layer processing closer to the network data plane, enabling in-transit traffic processing and reducing data transmission.

Proposed architecture and workflow

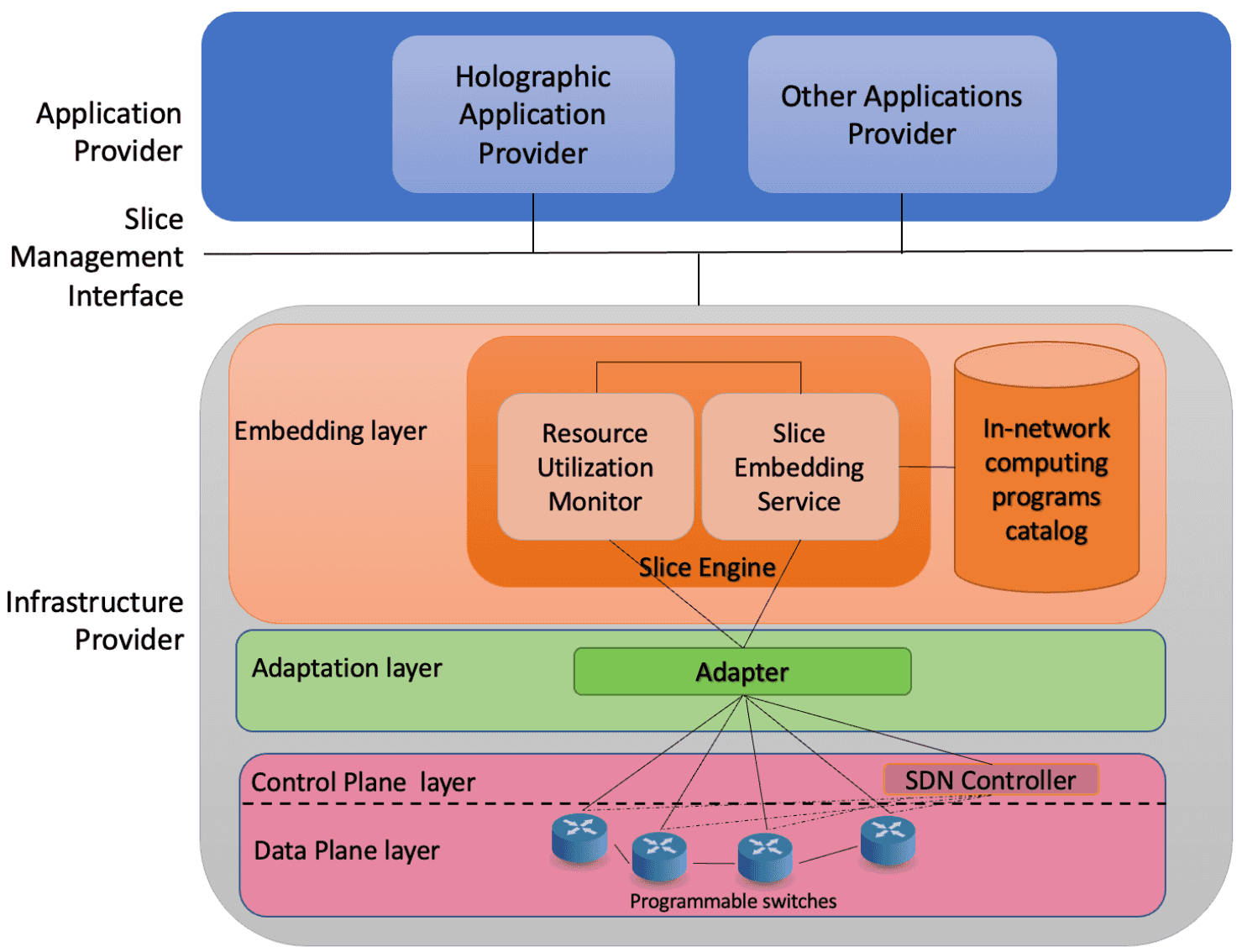

We propose an architecture, depicted in Figure 1, comprising the modules required for provisioning In-Network Computing (INC)-enabled slices. The ultimate objective is to reduce latency and network load.

Figure 1: INC Proposed Architecture.

The slice management interface links the holographic application provider to the infrastructure provider. It exposes the operations needed to manage INC-enabled slices: slice creation, update, and deletion. The infrastructure is structured into four layers, listed from bottom to top: the data plane, the control plane, the adapter layer, and the slice embedding layer. The data plane consists of switches, all assumed to be INC-enabled, that is, programmable and capable of hosting and executing INC applications. The control plane contains an SDN controller responsible for managing and controlling the network. The adapter layer, the second layer from the top, handles the heterogeneity anticipated in next-generation networks by providing a standardized interface to the slice embedding layer, which is the topmost layer of the architecture. The slice embedding layer contains two components: the INC program catalog and the slice engine. The INC program catalog is a repository of INC programs, while the slice engine comprises the resource utilization monitor and the slice embedding service. The resource utilization monitor collects performance indicators to ensure that the requirements specified by the application providers are met. The slice embedding service is responsible for the actual embedding process and for enabling INC functionality.
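The role of the adapter layer can be illustrated with a minimal sketch. It assumes two hypothetical back ends, one reached through an SDN controller and one programmable switch queried directly, that report statistics in different units. All class names, field names, and report formats below are illustrative assumptions and are not taken from the architecture itself.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SwitchStats:
    """Normalized performance indicators for one switch (illustrative fields)."""
    switch_id: str
    cpu_load: float        # fraction of processing capacity in use, 0.0 to 1.0
    link_util_mbps: float  # traffic currently carried, in Mbit/s


class InfrastructureAdapter(ABC):
    """Uniform interface that the slice embedding layer would call."""

    @abstractmethod
    def collect_stats(self) -> List[SwitchStats]:
        ...


class ControllerAdapter(InfrastructureAdapter):
    """Hypothetical back end reached through an SDN controller.

    The assumed report format is {switch_id: {"cpu_pct": ..., "mbps": ...}}.
    """

    def __init__(self, raw_report: Dict[str, Dict[str, float]]):
        self.raw_report = raw_report

    def collect_stats(self) -> List[SwitchStats]:
        # Convert CPU percentage to a fraction; Mbit/s is already normalized.
        return [
            SwitchStats(sw, values["cpu_pct"] / 100.0, values["mbps"])
            for sw, values in self.raw_report.items()
        ]


class DirectSwitchAdapter(InfrastructureAdapter):
    """Hypothetical programmable switch queried directly, reporting bit/s."""

    def __init__(self, switch_id: str, cpu_fraction: float, bits_per_second: float):
        self.switch_id = switch_id
        self.cpu_fraction = cpu_fraction
        self.bits_per_second = bits_per_second

    def collect_stats(self) -> List[SwitchStats]:
        # Convert bit/s to Mbit/s so both back ends use the same unit.
        return [SwitchStats(self.switch_id, self.cpu_fraction,
                            self.bits_per_second / 1e6)]


def collect_all(adapters: List[InfrastructureAdapter]) -> List[SwitchStats]:
    """Gather normalized statistics from every back end."""
    stats: List[SwitchStats] = []
    for adapter in adapters:
        stats.extend(adapter.collect_stats())
    return stats
```

With this shape, the resource utilization monitor would only ever see `SwitchStats` records, regardless of which controller or switch produced them.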

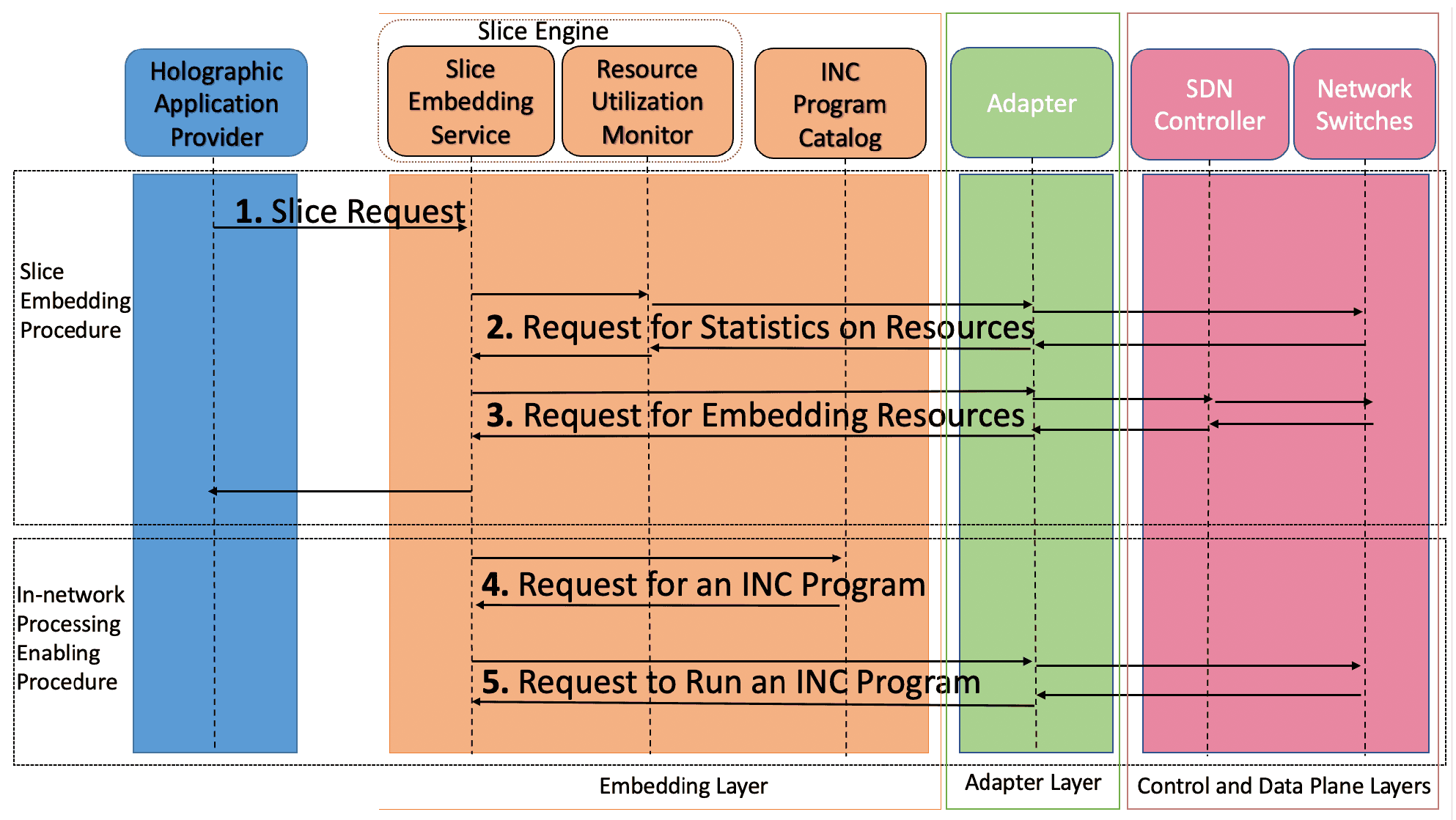

Figure 2: Sequence Diagram of the Slice Embedding and INC Enabling Procedures.

To showcase the benefits of the proposed architecture, we use a holographic concert as the demonstration use case. Here, the slice embedding service is pivotal: it embeds the requested slice and enables INC functionality. Figure 2 details the interactions involved. The sequence begins with (1) a request from the holographic concert provider to the slice embedding service, triggering the creation of a new slice. This request carries several requirements, including the required link bandwidth and latency, the maximum number of remote attendees, and the locations of remote attendees, particularly for holographic conferences. The slice embedding service then (2) asks the resource utilization monitor for performance indicators, such as CPU and link statistics. The resource utilization monitor in turn asks the adapter to collect these indicators, including switch statistics, either directly from the programmable devices or via the SDN controllers. The adapter maps the request to the different SDN controllers and programmable switches, which allows diverse physical infrastructures to be accommodated even in heterogeneous environments. Based on the available infrastructure resources, the embedding algorithm (3) decides how to embed the slice. Finally, the slice embedding service sends a slice creation notification to the holographic application provider, indicating that the requested slice has been created successfully.

Once the slice creation procedure completes, the INC enabling procedure begins. In this phase, (4) the slice embedding service selects the appropriate INC program, such as a transcoder, from the INC program catalog. The slice embedding service then (5) executes another algorithm to decide where to deploy the INC program on the switches. This decision considers objectives such as achieving ultra-low latency and high bandwidth, while also accounting for the limited processing capability of the programmable devices.
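The two procedures above can be sketched as follows. The text does not specify the embedding or placement algorithms, so the policies used here (pick the lowest-latency feasible path, then place the program on the first switch along that path with enough spare processing capacity) are assumptions chosen only for illustration, as are all type names, field names, and the catalog entry.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class SliceRequest:
    """Step 1: requirements sent by the holographic concert provider."""
    bandwidth_mbps: float           # required link bandwidth
    max_latency_ms: float           # required latency bound
    max_attendees: int              # maximum number of remote attendees
    attendee_locations: List[str]   # locations of remote attendees


@dataclass
class Path:
    """A candidate path, annotated with indicators gathered in step 2."""
    switches: List[str]
    free_bandwidth_mbps: float
    latency_ms: float


# Step 4: hypothetical catalog mapping a program name to the fraction of a
# switch's processing capacity it is assumed to need.
INC_CATALOG: Dict[str, float] = {"transcoder": 0.3}


def embed_slice(request: SliceRequest, candidates: List[Path]) -> Optional[Path]:
    """Step 3 (assumed policy): lowest-latency path meeting both requirements."""
    feasible = [
        p for p in candidates
        if p.free_bandwidth_mbps >= request.bandwidth_mbps
        and p.latency_ms <= request.max_latency_ms
    ]
    return min(feasible, key=lambda p: p.latency_ms) if feasible else None


def place_inc_program(path: Path, free_cpu: Dict[str, float],
                      program: str) -> Optional[str]:
    """Step 5 (assumed policy): first switch on the path with enough spare CPU."""
    needed = INC_CATALOG[program]
    for switch in path.switches:
        if free_cpu.get(switch, 0.0) >= needed:
            return switch
    return None  # no switch on the path can host the program
```

Returning `None` from either function models a rejected request; a real slice embedding service would presumably report this back to the application provider instead of sending a creation notification.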

Demonstration Scenario

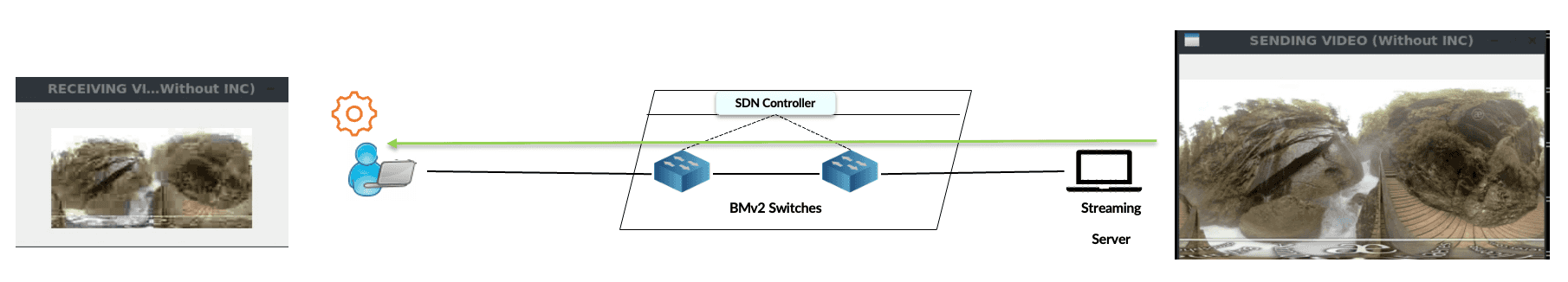

Figure 3: Streaming Without INC.

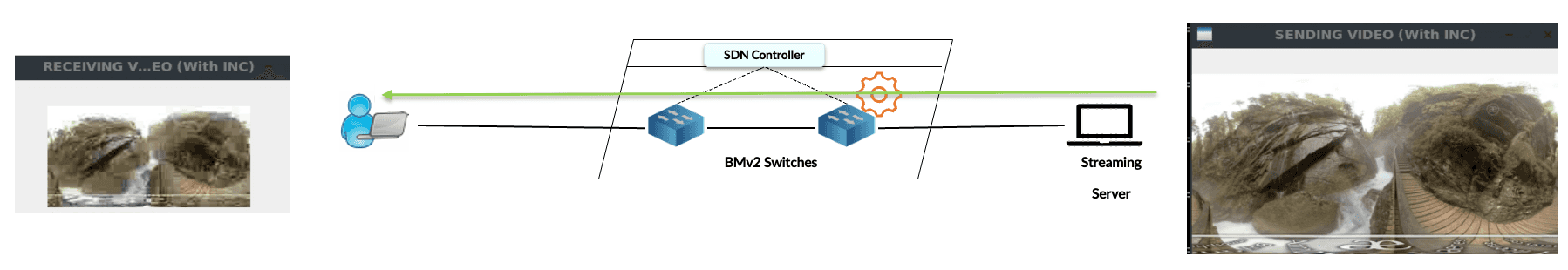

Figure 4: Streaming With INC.

Note: For ease of presentation, our demonstration features the streaming of a 360° video. Nonetheless, the same underlying principles apply to the streaming of holographic content. The main objective of the demonstration is to investigate the effect of INC on network load by evaluating two distinct use cases, which are executed simultaneously within the demonstration workflow. Use case 1, shown in Figure 3, involves streaming without INC, with transcoding performed at the client end. In contrast, use case 2, shown in Figure 4, involves streaming with INC, where transcoding occurs within the network itself. Our experiments run on a PC equipped with two 8-core 2.50 GHz Intel Xeon E5-2450 v2 CPUs and 80 GB of RAM. On this machine, we build the physical layer of the architecture with the Mininet emulator, using BMv2 software switches as the INC-enabled switches. INC-based video transcoding is implemented as a P4 program. To monitor link bandwidth usage, we collect bandwidth statistics by sending probe packets at 500 ms intervals in both use cases.
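As an illustration of how probe samples taken every 500 ms can be turned into link bandwidth figures, the following sketch assumes that each probe returns a cumulative byte counter for the monitored link. The counter format and the function names are assumptions; the demonstration's actual probe format is not described here.

```python
from typing import List

PROBE_INTERVAL_S = 0.5  # 500 ms between probes, as in the demonstration


def throughput_mbps(byte_counters: List[int],
                    interval_s: float = PROBE_INTERVAL_S) -> List[float]:
    """Convert cumulative byte counters into per-interval throughput (Mbit/s).

    Each consecutive pair of samples yields one value:
    (bytes transferred in the interval * 8 bits) / interval length / 1e6.
    """
    rates = []
    for previous, current in zip(byte_counters, byte_counters[1:]):
        rates.append((current - previous) * 8 / interval_s / 1e6)
    return rates


def mean_throughput_mbps(byte_counters: List[int],
                         interval_s: float = PROBE_INTERVAL_S) -> float:
    """Average throughput over the whole observation window, in Mbit/s."""
    rates = throughput_mbps(byte_counters, interval_s)
    return sum(rates) / len(rates) if rates else 0.0
```

Running the same computation on the counters of both use cases would give directly comparable per-interval load curves for the link of interest.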

Demonstration

In the following video, we present the results of testing our system in an experimental test lab and walk through each step of the experiment.